What Do Base And Limit Registers Do

Main Memory

References:

- Abraham Silberschatz, Greg Gagne, and Peter Baer Galvin, "Operating System Concepts, Ninth Edition", Chapter 8

8.1 Background

- Obviously memory accesses and memory management are a very important part of modern computer operation. Every instruction has to be fetched from memory before it can be executed, and most instructions involve retrieving data from memory or storing data in memory or both.

- The advent of multi-tasking OSes compounds the complexity of memory management, because as processes are swapped in and out of the CPU, so must their code and data be swapped in and out of memory, all at high speeds and without interfering with any other processes.

- Shared memory, virtual memory, the classification of memory as read-only versus read-write, and concepts like copy-on-write forking all further complicate the issue.

8.1.1 Basic Hardware

- It should be noted that from the memory chips' point of view, all memory accesses are equivalent. The memory hardware doesn't know what a particular part of memory is being used for, nor does it care. This is almost true of the OS as well, although not entirely.

- The CPU can only access its registers and main memory. It cannot, for example, make direct access to the hard drive, so any data stored there must first be transferred into the main memory chips before the CPU can work with it. ( Device drivers communicate with their hardware via interrupts and "memory" accesses, sending short instructions for example to transfer data from the hard drive to a specified location in main memory. The disk controller monitors the bus for such instructions, transfers the data, and then notifies the CPU that the data is there with another interrupt, but the CPU never gets direct access to the disk. )

- Memory accesses to registers are very fast, generally one clock tick, and a CPU may be able to execute more than one machine instruction per clock tick.

- Memory accesses to main memory are comparatively slow, and may take a number of clock ticks to complete. This would require intolerable waiting by the CPU if it were not for an intermediary fast memory cache built into most modern CPUs. The basic idea of the cache is to transfer chunks of memory at a time from the main memory to the cache, and then to access individual memory locations one at a time from the cache.

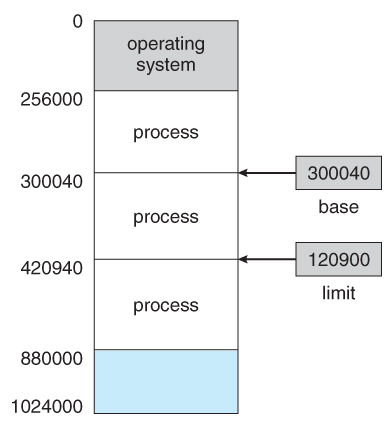

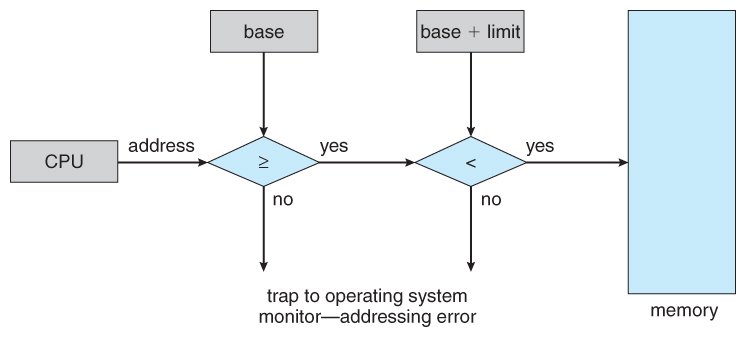

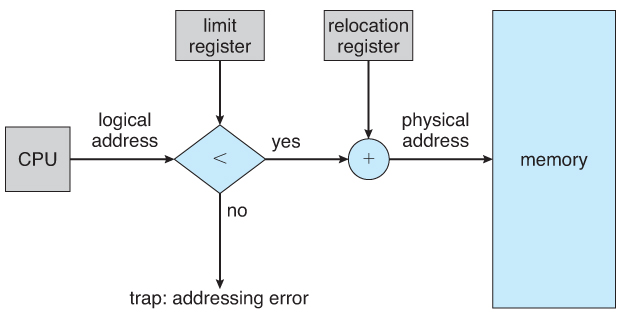

- User processes must be restricted so that they only access memory locations that "belong" to that particular process. This is usually implemented using a base register and a limit register for each process, as shown in Figures 8.1 and 8.2 below. Every memory access made by a user process is checked against these two registers, and if a memory access is attempted outside the valid range, then a fatal error is generated. The OS obviously has access to all existing memory locations, as this is necessary to swap users' code and data in and out of memory. It should also be obvious that changing the contents of the base and limit registers is a privileged activity, allowed only to the OS kernel.
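The base and limit check can be sketched in a few lines of Python. This is only an illustration of the comparison the hardware performs, not real hardware behavior: the names `check_access` and `AddressFault` and the sample register values are assumptions made up for the example.

```python
class AddressFault(Exception):
    """Raised when a process touches memory outside its allowed range."""


def check_access(address: int, base: int, limit: int) -> int:
    """Return the address if base <= address < base + limit, else fault."""
    if address < base or address >= base + limit:
        raise AddressFault(f"address {address} outside [{base}, {base + limit})")
    return address


# Hypothetical register values for one process.
BASE, LIMIT = 300040, 120900
```

With these values, any address from 300040 up to but not including 420940 passes the check, and anything else raises the fault that the OS would treat as a fatal error.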

Figure 8.1 - A base and a limit register define a logical address space

Figure 8.2 - Hardware address protection with base and limit registers

8.1.2 Address Binding

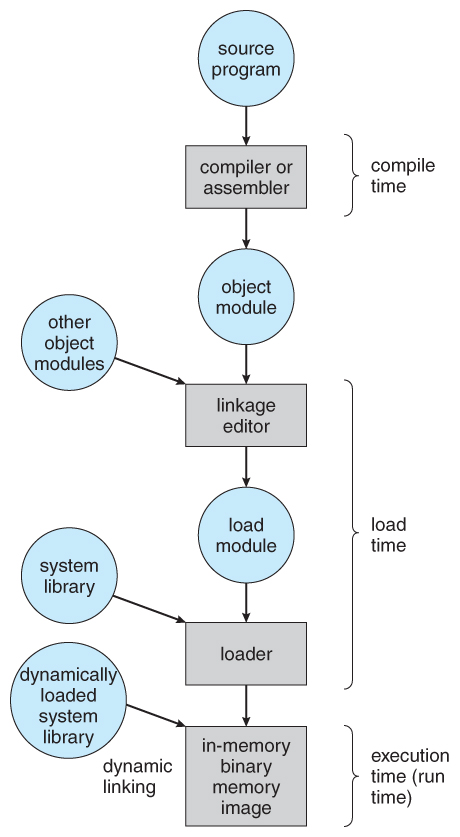

- User programs typically refer to memory addresses with symbolic names such as "i", "count", and "averageTemperature". These symbolic names must be mapped or bound to physical memory addresses, which typically occurs in several stages:

- Compile Time - If it is known at compile time where a program will reside in physical memory, then absolute code can be generated by the compiler, containing actual physical addresses. However if the load address changes at some later time, then the program will have to be recompiled. DOS .COM programs use compile time binding.

- Load Time - If the location at which a program will be loaded is not known at compile time, then the compiler must generate relocatable code, which references addresses relative to the start of the program. If that starting address changes, then the program must be reloaded but not recompiled.

- Execution Time - If a program can be moved around in memory during the course of its execution, then binding must be delayed until execution time. This requires special hardware, and is the method implemented by most modern OSes.

- Figure 8.3 shows the various stages of the binding processes and the units involved in each stage:

Figure 8.3 - Multistep processing of a user program

8.1.3 Logical Versus Physical Address Space

- The address generated by the CPU is a logical address, whereas the address actually seen by the memory hardware is a physical address.

- Addresses bound at compile time or load time have identical logical and physical addresses.

- Addresses created at execution time, however, have different logical and physical addresses.

- In this case the logical address is also known as a virtual address, and the two terms are used interchangeably by our text.

- The set of all logical addresses used by a program composes the logical address space, and the set of all corresponding physical addresses composes the physical address space.

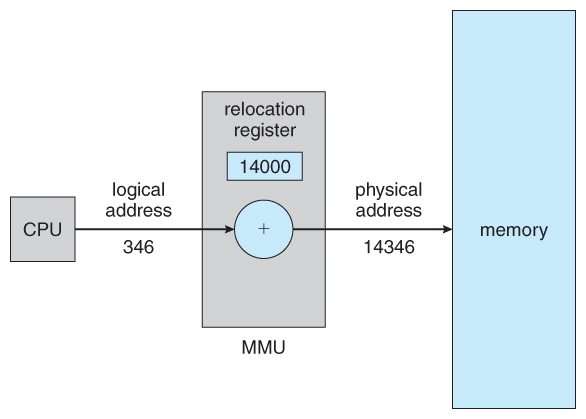

- The run time mapping of logical to physical addresses is handled by the memory-management unit, MMU.

- The MMU can take on many forms. One of the simplest is a modification of the base-register scheme described earlier.

- The base register is now termed a relocation register, whose value is added to every memory request at the hardware level.

- Note that user programs never see physical addresses. User programs work entirely in logical address space, and any memory references or manipulations are done using purely logical addresses. Only when the address gets sent to the physical memory chips is the physical memory address generated.
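As a rough sketch of dynamic relocation, the function below adds a relocation register to every logical address. The name `translate`, the sample numbers, and the limit check ( borrowed from the base and limit scheme described earlier ) are assumptions for illustration only.

```python
def translate(logical: int, relocation: int, limit: int) -> int:
    """Map a logical address to a physical one, as a simple MMU might."""
    if not 0 <= logical < limit:
        raise ValueError(f"logical address {logical} exceeds limit {limit}")
    return logical + relocation


# Hypothetical values: the process is loaded at physical address 14000.
physical = translate(346, relocation=14000, limit=1000)
```

The program only ever works with the logical address 346. The physical address 14346 exists only on the way to the memory chips, so moving the process means changing nothing but the relocation value.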

Figure 8.4 - Dynamic relocation using a relocation register

8.1.4 Dynamic Loading

- Rather than loading an entire program into memory at once, dynamic loading loads up each routine as it is called. The advantage is that unused routines need never be loaded, reducing total memory usage and generating faster program startup times. The downside is the added complexity and overhead of checking to see if a routine is loaded every time it is called and then loading it up if it is not already loaded.

8.1.5 Dynamic Linking and Shared Libraries

- With static linking library modules get fully included in executable modules, wasting both disk space and main memory usage, because every program that included a certain routine from the library would have to have their own copy of that routine linked into their executable code.

- With dynamic linking, however, only a stub is linked into the executable module, containing references to the actual library module linked in at run time.

- This method saves disk space, because the library routines do not need to be fully included in the executable modules, only the stubs.

- We will also learn that if the code section of the library routines is reentrant, ( meaning it does not modify the code while it runs, making it safe to re-enter it ), then main memory can be saved by loading only one copy of dynamically linked routines into memory and sharing the code amongst all processes that are concurrently using it. ( Each process would have their own copy of the data section of the routines, but that may be small relative to the code segments. ) Obviously the OS must manage shared routines in memory.

- An added benefit of dynamically linked libraries ( DLLs, also known as shared libraries or shared objects on UNIX systems ) involves easy upgrades and updates. When a program uses a routine from a standard library and the routine changes, then the program must be re-built ( re-linked ) in order to incorporate the changes. However if DLLs are used, then as long as the stub doesn't change, the program can be updated merely by loading new versions of the DLLs onto the system. Version information is maintained in both the program and the DLLs, so that a program can specify a particular version of the DLL if necessary.

- In practice, the first time a program calls a DLL routine, the stub will recognize the fact and will replace itself with the actual routine from the DLL library. Further calls to the same routine will access the routine directly and not incur the overhead of the stub access. ( Following the UML Proxy Pattern. )

- ( Additional information regarding dynamic linking is available at http://www.iecc.com/linker/linker10.html )
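The self-replacing stub can be imitated in Python. This is only an analogy, not how a real dynamic linker is implemented: `LinkTable` and `_load_routine` are hypothetical names, and Python's `math` module stands in for the shared library.

```python
import math

calls_to_loader = 0


def _load_routine(name):
    """Stand-in for the dynamic linker: fetch the real routine by name."""
    global calls_to_loader
    calls_to_loader += 1
    return getattr(math, name)


class LinkTable:
    """Holds one callable per routine; each starts out as a stub."""

    def __init__(self, names):
        for name in names:
            setattr(self, name, self._make_stub(name))

    def _make_stub(self, name):
        def stub(*args):
            real = _load_routine(name)   # resolve on the first call only
            setattr(self, name, real)    # the stub replaces itself
            return real(*args)
        return stub


lib = LinkTable(["sqrt"])
first = lib.sqrt(16.0)    # goes through the stub
second = lib.sqrt(25.0)   # calls the real routine directly
```

After the first call the table entry is the real routine, so the loader runs exactly once no matter how many later calls are made.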

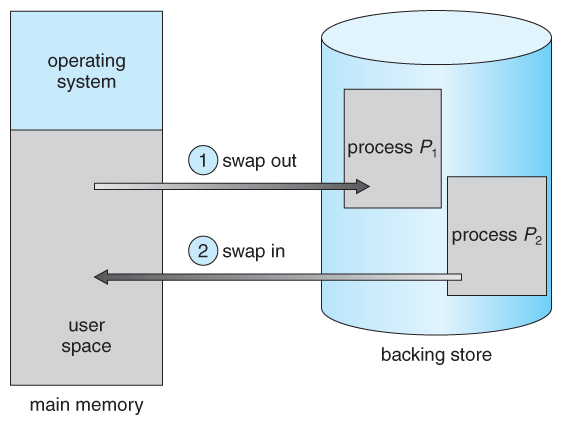

8.2 Swapping

- A process must be loaded into memory in order to execute.

- If there is not enough memory available to keep all running processes in memory at the same time, then some processes that are not currently using the CPU may have their memory swapped out to a fast local disk called the backing store.

8.2.1 Standard Swapping

- If compile-time or load-time address binding is used, then processes must be swapped back into the same memory location from which they were swapped out. If execution time binding is used, then the processes can be swapped back into any available location.

- Swapping is a very slow process compared to other operations. For example, if a user process occupied 10 MB and the transfer rate for the backing store were 40 MB per second, then it would take 1/4 second ( 250 milliseconds ) just to do the data transfer. Adding in a latency lag of 8 milliseconds and ignoring head seek time for the moment, and further recognizing that swapping involves moving old data out as well as new data in, the overall transfer time required for this swap is 2 x ( 250 + 8 ) = 516 milliseconds, or over half a second. For efficient processor scheduling the CPU time slice should be significantly longer than this lost transfer time.

- To reduce swapping transfer overhead, it is desired to transfer as little data as possible, which requires that the system know how much memory a process is using, as opposed to how much it might use. Programmers can help with this by freeing up dynamic memory that they are no longer using.

- It is important to swap processes out of memory only when they are idle, or more to the point, only when there are no pending I/O operations. ( Otherwise the pending I/O operation could write into the wrong process's memory space. ) The solution is to either swap only totally idle processes, or do I/O operations only into and out of OS buffers, which are then transferred to or from the process's main memory as a second step.

- Most modern OSes no longer use swapping, because it is too slow and there are faster alternatives available. ( e.g. Paging. ) However some UNIX systems will still invoke swapping if the system gets extremely full, and then discontinue swapping when the load reduces again. Windows 3.1 would use a modified version of swapping that was somewhat controlled by the user, swapping processes out if necessary and then only swapping them back in when the user focused on that particular window.
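The swap-time estimate from the 10 MB example can be worked through in code. The function name and parameters below are assumptions for illustration. The model simply charges one transfer plus one latency delay in each direction and ignores seek time, as the example does.

```python
def swap_time_ms(size_mb: float, rate_mb_per_s: float, latency_ms: float) -> float:
    """Time to swap one process out and another of equal size in."""
    one_way = size_mb / rate_mb_per_s * 1000 + latency_ms
    return 2 * one_way


total = swap_time_ms(size_mb=10, rate_mb_per_s=40, latency_ms=8)
```

With the example's figures each direction costs 250 + 8 = 258 milliseconds, so the full swap comes to 516 milliseconds.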

Figure 8.5 - Swapping of two processes using a disk as a backing store

8.2.2 Swapping on Mobile Systems ( New Section in 9th Edition )

- Swapping is typically not supported on mobile platforms, for several reasons:

- Mobile devices typically use flash memory in place of more spacious hard drives for persistent storage, so there is not as much space available.

- Flash memory can only be written to a limited number of times before it becomes unreliable.

- The bandwidth to flash memory is also lower.

- Apple's iOS asks applications to voluntarily free up memory

- Read-only data, e.g. code, is simply removed, and reloaded later if needed.

- Modified data, e.g. the stack, is never removed, but . . .

- Apps that fail to free up sufficient memory can be removed by the OS

- Android follows a similar strategy.

- Prior to terminating a process, Android writes its application state to flash memory for quick restarting.

8.3 Contiguous Memory Allocation

- One approach to memory management is to load each process into a contiguous space. The operating system is allocated space first, usually at either low or high memory locations, and then the remaining available memory is allocated to processes as needed. ( The OS is usually loaded low, because that is where the interrupt vectors are located, but on older systems part of the OS was loaded high to make more room in low memory ( within the 640K barrier ) for user processes. )

8.3.1 Memory Protection ( was Memory Mapping and Protection )

- The system shown in Figure 8.6 below allows protection against user programs accessing areas that they should not, allows programs to be relocated to different memory starting addresses as needed, and allows the memory space devoted to the OS to grow or shrink dynamically as needs change.

Figure 8.6 - Hardware support for relocation and limit registers

8.3.2 Memory Allocation

- One method of allocating contiguous memory is to divide all available memory into equal sized partitions, and to assign each process to their own partition. This restricts both the number of simultaneous processes and the maximum size of each process, and is no longer used.

- An alternate approach is to keep a list of unused ( free ) memory blocks ( holes ), and to find a hole of a suitable size whenever a process needs to be loaded into memory. There are many different strategies for finding the "best" allocation of memory to processes, including the three most commonly discussed:

- First fit - Search the list of holes until one is found that is big enough to satisfy the request, and assign a portion of that hole to that process. Whatever fraction of the hole not needed by the request is left on the free list as a smaller hole. Subsequent requests may start looking either from the beginning of the list or from the point at which this search ended.

- Best fit - Allocate the smallest hole that is big enough to satisfy the request. This saves large holes for other process requests that may need them later, but the resulting unused portions of holes may be too small to be of any use, and will therefore be wasted. Keeping the free list sorted can speed up the process of finding the right hole.

- Worst fit - Allocate the largest hole available, thereby increasing the likelihood that the remaining portion will be usable for satisfying future requests.

- Simulations show that either first or best fit are better than worst fit in terms of both time and storage utilization. First and best fits are about equal in terms of storage utilization, but first fit is faster.
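The three placement strategies differ only in which qualifying hole they pick, which a short sketch makes concrete. The function names, the list-of-sizes representation of the free list, and the sample hole sizes are all assumptions for illustration. A real allocator would also track hole addresses and merge neighboring holes.

```python
def pick_hole(holes, request, strategy):
    """Return the index of the hole chosen for `request`, or None."""
    candidates = [(size, i) for i, size in enumerate(holes) if size >= request]
    if not candidates:
        return None
    if strategy == "first":
        return min(candidates, key=lambda c: c[1])[1]   # earliest in the list
    if strategy == "best":
        return min(candidates)[1]                       # smallest that fits
    if strategy == "worst":
        return max(candidates, key=lambda c: c[0])[1]   # largest available
    raise ValueError(f"unknown strategy: {strategy}")


def allocate(holes, request, strategy):
    """Carve `request` units out of the chosen hole, modifying `holes`."""
    i = pick_hole(holes, request, strategy)
    if i is None:
        return None
    holes[i] -= request          # the remainder stays on the free list
    if holes[i] == 0:
        del holes[i]
    return i


holes = [100, 500, 200, 300, 600]   # hypothetical free list, sizes in KB
```

For a 212 KB request against this list, first fit picks the 500 KB hole, best fit the 300 KB hole, and worst fit the 600 KB hole.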

8.3.3 Fragmentation

- All the memory allocation strategies suffer from external fragmentation, though first and best fits experience the problems more so than worst fit. External fragmentation means that the available memory is broken up into lots of little pieces, none of which is big enough to satisfy the next memory requirement, although the sum total could.

- The amount of memory lost to fragmentation may vary with algorithm, usage patterns, and some design decisions such as which end of a hole to allocate and which end to save on the free list.

- Statistical analysis of first fit, for example, shows that for N blocks of allocated memory, another 0.5 N will be lost to fragmentation.

- Internal fragmentation also occurs, with all memory allocation strategies. This is caused by the fact that memory is allocated in blocks of a fixed size, whereas the actual memory needed will rarely be that exact size. For a random distribution of memory requests, on the average 1/2 block will be wasted per memory request, because on the average the last allocated block will be only half full.

- Note that the same effect happens with hard drives, and that modern hardware gives us increasingly larger drives and memory at the expense of ever larger block sizes, which translates to more memory lost to internal fragmentation.

- Some systems use variable size blocks to minimize losses due to internal fragmentation.

- If the programs in memory are relocatable, ( using execution-time address binding ), then the external fragmentation problem can be reduced via compaction, i.e. moving all processes down to one end of physical memory. This only involves updating the relocation register for each process, as all internal work is done using logical addresses.

- Another solution as we will see in upcoming sections is to allow processes to use non-contiguous blocks of physical memory, with a separate relocation register for each block.

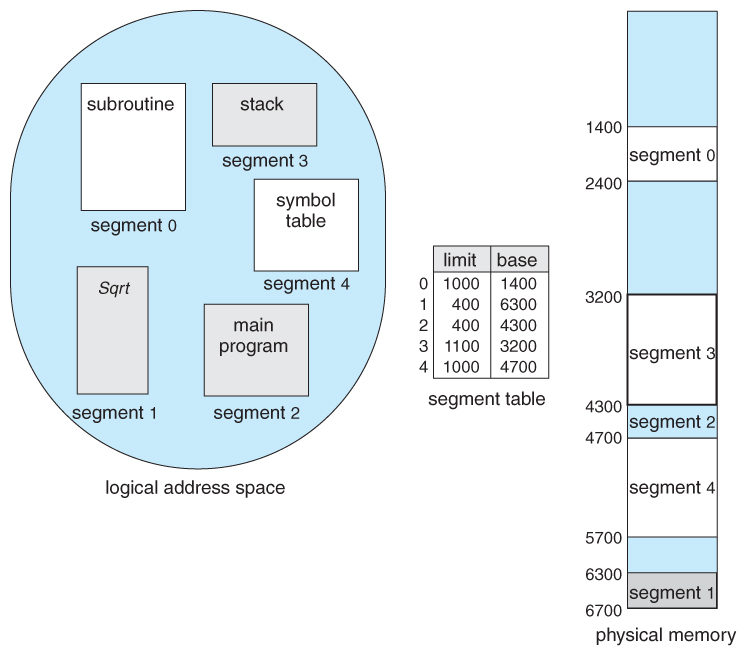

8.4 Segmentation

8.4.1 Basic Method

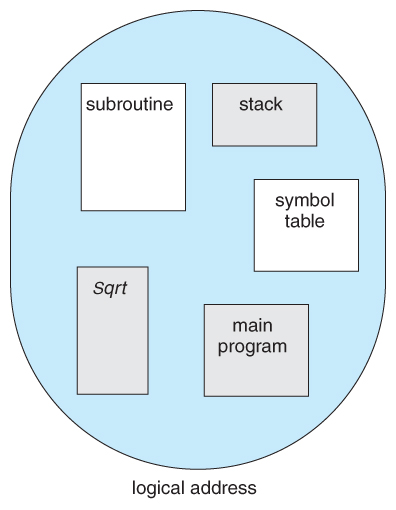

- Most users ( programmers ) do not think of their programs as existing in one continuous linear address space.

- Rather they tend to think of their memory in multiple segments, each dedicated to a particular use, such as code, data, the stack, the heap, etc.

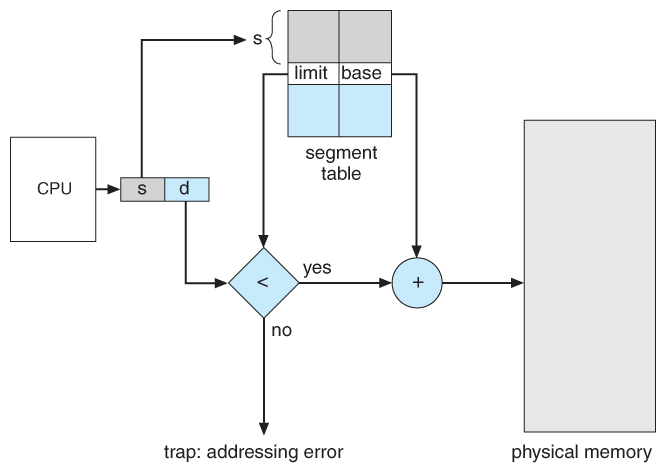

- Memory segmentation supports this view by providing addresses with a segment number ( mapped to a segment base address ) and an offset from the beginning of that segment.

- For example, a C compiler might generate 5 segments for the user code, library code, global ( static ) variables, the stack, and the heap, as shown in Figure 8.7:

Figure 8.7 Programmer's view of a program.

8.4.2 Segmentation Hardware

- A segment table maps segment-offset addresses to physical addresses, and simultaneously checks for invalid addresses, using a system similar to the page tables and relocation base registers discussed previously. ( Note that at this point in the discussion of segmentation, each segment is kept in contiguous memory and may be of different sizes, but that segmentation can also be combined with paging as we shall see shortly. )
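A segment-table lookup can be sketched as follows. The table contents and the name `translate_segment` are assumptions invented for the example. Each entry pairs a segment base with a segment limit, so the lookup both relocates and range-checks the offset.

```python
# Hypothetical segment table: segment number -> (base, limit).
SEGMENT_TABLE = {
    0: (1400, 1000),
    1: (6300, 400),
    2: (4300, 400),
}


def translate_segment(segment: int, offset: int) -> int:
    """Map a (segment, offset) pair to a physical address, or trap."""
    if segment not in SEGMENT_TABLE:
        raise LookupError(f"no such segment: {segment}")
    base, limit = SEGMENT_TABLE[segment]
    if not 0 <= offset < limit:
        raise LookupError(f"offset {offset} outside segment {segment}")
    return base + offset
```

With this table, byte 53 of segment 2 lives at physical address 4300 + 53 = 4353, while a reference to byte 1222 of segment 0 traps because that segment is only 1000 bytes long.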

Figure 8.8 - Segmentation hardware

Figure 8.9 - Example of segmentation

8.5 Paging

- Paging is a memory management scheme that allows a process's physical memory to be discontinuous, and which eliminates problems with fragmentation by allocating memory in equal sized blocks known as pages.

- Paging eliminates most of the problems of the other methods discussed previously, and is the predominant memory management technique used today.

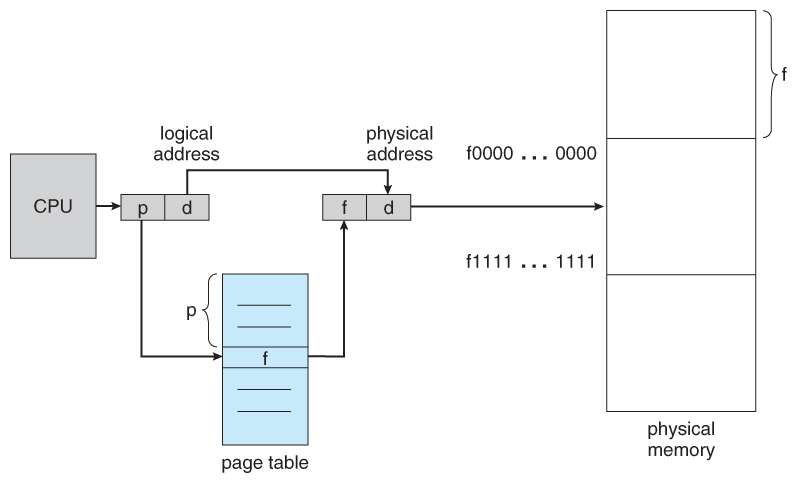

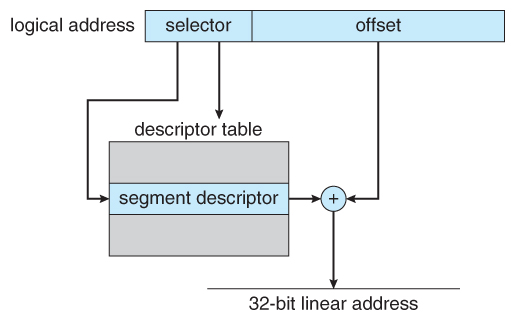

8.5.1 Basic Method

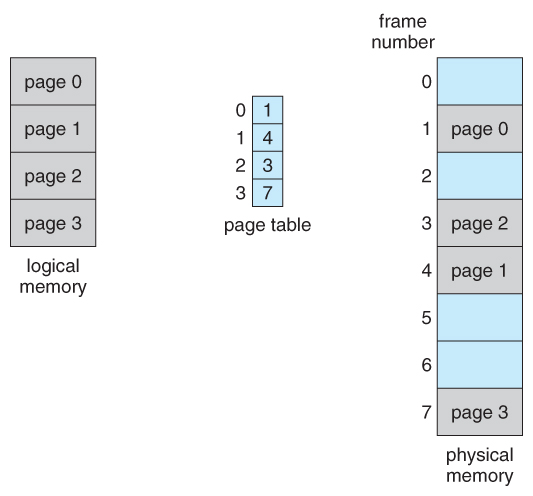

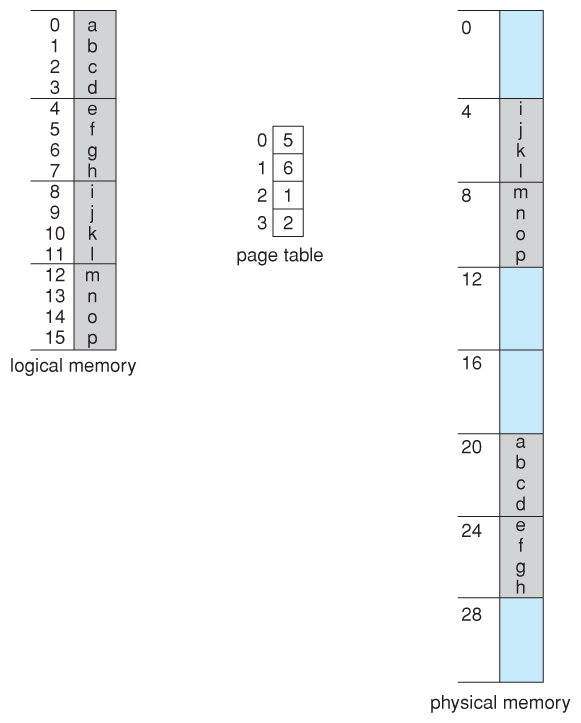

- The basic idea behind paging is to divide physical memory into a number of equal sized blocks called frames, and to divide a program's logical memory space into blocks of the same size called pages.

- Any page ( from any process ) can be placed into any available frame.

- The page table is used to look up what frame a particular page is stored in at the moment. In the following example, for instance, page 2 of the program's logical memory is currently stored in frame 3 of physical memory:

Figure 8.10 - Paging hardware

Figure 8.11 - Paging model of logical and physical memory

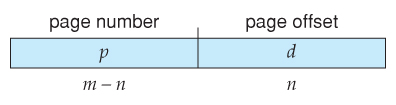

- A logical address consists of two parts: A page number in which the address resides, and an offset from the beginning of that page. ( The number of bits in the page number limits how many pages a single process can address. The number of bits in the offset determines the maximum size of each page, and should correspond to the system frame size. )

- The page table maps the page number to a frame number, to yield a physical address which also has two parts: The frame number and the offset within that frame. The number of bits in the frame number determines how many frames the system can address, and the number of bits in the offset determines the size of each frame.

- Page numbers, frame numbers, and frame sizes are determined by the architecture, but are typically powers of two, allowing addresses to be split at a certain number of bits. For example, if the logical address size is 2^k and the page size is 2^n, then the high-order k-n bits of a logical address designate the page number and the remaining n bits represent the offset.

- Note also that the number of bits in the page number and the number of bits in the frame number do not have to be identical. The former determines the address range of the logical address space, and the latter relates to the physical address space.

- ( DOS used to use an addressing scheme with 16 bit frame numbers and 16-bit offsets, on hardware that only supported 24-bit hardware addresses. The result was a resolution of starting frame addresses finer than the size of a single frame, and multiple frame-offset combinations that mapped to the same physical hardware address. )

- Consider the following micro example, in which a process has 16 bytes of logical memory, mapped in 4 byte pages into 32 bytes of physical memory. ( Presumably some other processes would be consuming the remaining 16 bytes of physical memory. )

Figure 8.12 - Paging example for a 32-byte memory with 4-byte pages
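The translation in the micro example can be sketched in code. The page-table contents below are an assumption, since the figure's values are not reproduced in these notes, and `translate_page` is a made-up name. What matters is the split of the logical address into a page number ( the high-order bits ) and an offset ( the low-order 2 bits for 4-byte pages ).

```python
PAGE_SIZE = 4                    # bytes per page and per frame
OFFSET_BITS = 2                  # log2(PAGE_SIZE)
PAGE_TABLE = [5, 6, 1, 2]        # hypothetical: page number -> frame number


def translate_page(logical: int) -> int:
    """Translate a logical address in the 16-byte logical space."""
    if not 0 <= logical < len(PAGE_TABLE) * PAGE_SIZE:
        raise ValueError(f"logical address {logical} out of range")
    page = logical >> OFFSET_BITS          # high-order bits
    offset = logical & (PAGE_SIZE - 1)     # low-order bits
    frame = PAGE_TABLE[page]
    return (frame << OFFSET_BITS) | offset
```

With this assumed table, logical address 13 is page 3, offset 1. Page 3 maps to frame 2, so the physical address is 2 x 4 + 1 = 9.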

- Note that paging is like having a table of relocation registers, one for each page of the logical memory.

- There is no external fragmentation with paging. All blocks of physical memory are used, and there are no gaps in between and no problems with finding the right sized hole for a particular chunk of memory.

- There is, however, internal fragmentation. Memory is allocated in chunks the size of a page, and on the average, the last page will only be half full, wasting on the average half a page of memory per process. ( Possibly more, if processes keep their code and data in separate pages. )

- Larger page sizes waste more memory, but are more efficient in terms of overhead. Modern trends have been to increase page sizes, and some systems even have multiple size pages to try and make the best of both worlds.

- Page table entries ( frame numbers ) are typically 32 bit numbers, allowing access to 2^32 physical page frames. If those frames are 4 KB in size each, that translates to 16 TB of addressable physical memory. ( 32 + 12 = 44 bits of physical address space. )

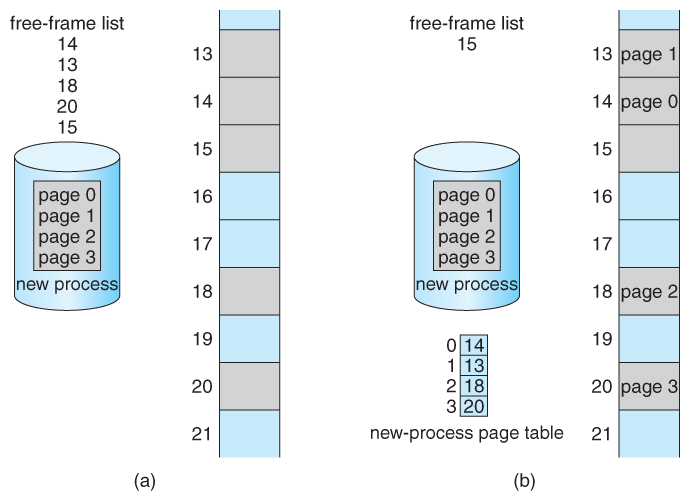

- When a process requests memory ( e.g. when its code is loaded in from disk ), free frames are allocated from a free-frame list, and inserted into that process's page table.

- Processes are blocked from accessing anyone else's memory because all of their memory requests are mapped through their page table. There is no way for them to generate an address that maps into any other process's memory space.

- The operating system must keep track of each individual process's page table, updating it whenever the process's pages get moved in and out of memory, and applying the correct page table when processing system calls for a particular process. This all increases the overhead involved when swapping processes in and out of the CPU. ( The currently active page table must be updated to reflect the process that is currently running. )

Figure 8.13 - Free frames (a) before allocation and (b) after allocation

8.5.2 Hardware Support

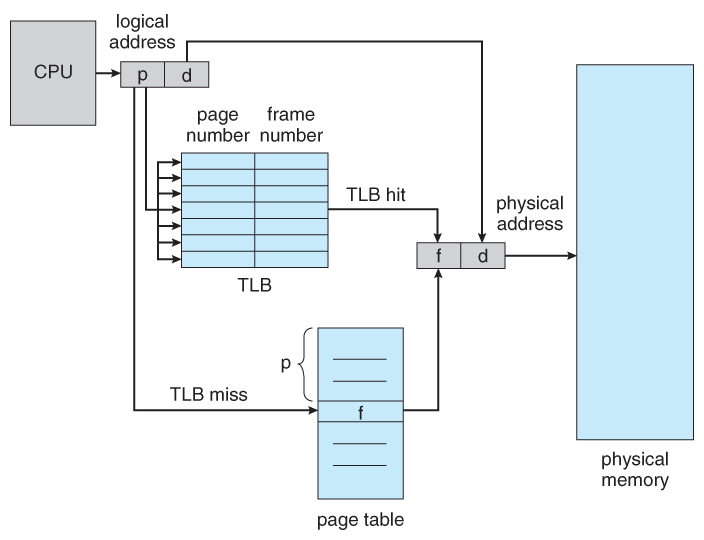

- Page lookups must be done for every memory reference, and whenever a process gets swapped in or out of the CPU, its page table must be swapped in and out too, along with the instruction registers, etc. It is therefore appropriate to provide hardware support for this operation, in order to make it as fast as possible and to make process switches as fast as possible also.

- One option is to use a set of registers for the page table. For example, the DEC PDP-11 uses 16-bit addressing and 8 KB pages, resulting in only 8 pages per process. ( It takes 13 bits to address 8 KB of offset, leaving only 3 bits to define a page number. )

- An alternate option is to store the page table in main memory, and to use a single register ( called the page-table base register, PTBR ) to record where in memory the page table is located.

- Process switching is fast, because only the single register needs to be changed.

- However memory access just got half as fast, because every memory access now requires two memory accesses - One to fetch the frame number from memory and then another one to access the desired memory location.

- The solution to this problem is to use a very special high-speed memory device called the translation look-aside buffer, TLB.

- The benefit of the TLB is that it can search an entire table for a key value in parallel, and if it is found anywhere in the table, then the corresponding lookup value is returned.

Figure 8.14 - Paging hardware with TLB

- The TLB is very expensive, however, and therefore very small. ( Not large enough to hold the entire page table. ) It is therefore used as a cache device.

- Addresses are first checked against the TLB, and if the info is not there ( a TLB miss ), then the frame is looked up from main memory and the TLB is updated.

- If the TLB is full, then replacement strategies range from least-recently used, LRU to random.

- Some TLBs allow some entries to be wired down, which means that they cannot be removed from the TLB. Typically these would be kernel frames.

- Some TLBs store address-space identifiers, ASIDs, to keep track of which process "owns" a particular entry in the TLB. This allows entries from multiple processes to be stored simultaneously in the TLB without granting one process access to some other process's memory location. Without this feature the TLB has to be flushed clean with every process switch.

- The percentage of time that the desired information is found in the TLB is termed the hit ratio.

- ( Eighth Edition Version: ) For example, suppose that it takes 100 nanoseconds to access main memory, and only 20 nanoseconds to search the TLB. Then a TLB hit takes 120 nanoseconds total ( 20 to find the frame number and then another 100 to go get the data ), and a TLB miss takes 220 ( 20 to search the TLB, 100 to go get the frame number, and then another 100 to go get the data. ) So with an 80% TLB hit ratio, the average memory access time would be:

0.80 * 120 + 0.20 * 220 = 140 nanoseconds

for a 40% slowdown to get the frame number. A 98% hit rate would yield 122 nanoseconds average access time ( you should verify this ), for a 22% slowdown.

- ( Ninth Edition Version: ) The ninth edition ignores the 20 nanoseconds required to search the TLB, yielding

0.80 * 100 + 0.20 * 200 = 120 nanoseconds

for a 20% slowdown to get the frame number. A 99% hit rate would yield 101 nanoseconds average access time ( you should verify this ), for a 1% slowdown.
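Both versions of the calculation follow the same weighted average, which can be checked with a small helper. The function name and parameter names are assumptions. Setting `tlb_ns` to 0 reproduces the ninth edition's simplification.

```python
def effective_access_ns(hit_ratio: float, memory_ns: float, tlb_ns: float = 0) -> float:
    """Average memory access time with a TLB in front of the page table."""
    hit_cost = tlb_ns + memory_ns          # TLB search, then the data
    miss_cost = tlb_ns + 2 * memory_ns     # TLB search, page table, data
    return hit_ratio * hit_cost + (1 - hit_ratio) * miss_cost


eighth_80 = effective_access_ns(0.80, memory_ns=100, tlb_ns=20)
eighth_98 = effective_access_ns(0.98, memory_ns=100, tlb_ns=20)
ninth_80 = effective_access_ns(0.80, memory_ns=100)
ninth_99 = effective_access_ns(0.99, memory_ns=100)
```

Up to floating-point rounding, these evaluate to 140, 122, 120, and 101 nanoseconds respectively, matching the figures quoted for both editions.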

8.5.3 Protection

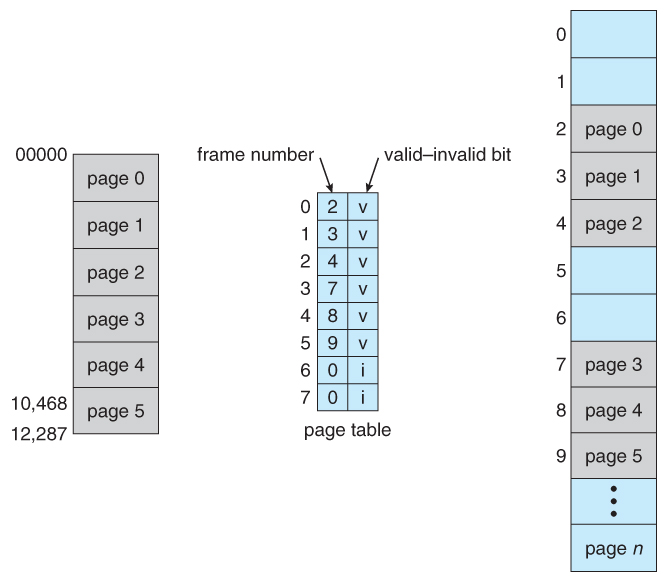

- The page table can also help to protect processes from accessing memory that they shouldn't, or their own memory in ways that they shouldn't.

- A bit or bits can be added to the page table to classify a page as read-write, read-only, read-write-execute, or some combination of these sorts of things. Then each memory reference can be checked to ensure it is accessing the memory in the appropriate mode.

- Valid / invalid bits can be added to "mask off" entries in the page table that are not in use by the current process, as shown by example in Figure 8.15 below.

- Note that the valid / invalid bits described above cannot block all illegal memory accesses, due to the internal fragmentation. ( Areas of memory in the last page that are not entirely filled by the process, and may contain data left over by whoever used that frame last. )

- Many processes do not use all of the page table available to them, particularly in modern systems with very large potential page tables. Rather than waste memory by creating a full-size page table for every process, some systems use a page-table length register, PTLR, to specify the length of the page table.
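The protection checks described in this section can be combined in one small sketch. The entry layout, the names `PageTableEntry`, `ProtectionFault`, and `check_reference`, and the sample table are all assumptions for illustration. Here the length of the Python list plays the role of the PTLR.

```python
from dataclasses import dataclass


class ProtectionFault(Exception):
    """Raised for any reference the page table does not permit."""


@dataclass
class PageTableEntry:
    frame: int
    valid: bool = True       # valid / invalid bit
    writable: bool = False   # read-only unless set


def check_reference(table, page, write=False):
    """Return the frame for `page`, or raise if the access is illegal."""
    if not 0 <= page < len(table):          # beyond the page-table length
        raise ProtectionFault("page number beyond page-table length")
    entry = table[page]
    if not entry.valid:
        raise ProtectionFault("page is marked invalid")
    if write and not entry.writable:
        raise ProtectionFault("write to a read-only page")
    return entry.frame


table = [
    PageTableEntry(frame=2),                   # read-only code page
    PageTableEntry(frame=7, writable=True),    # read-write data page
    PageTableEntry(frame=0, valid=False),      # entry not in use
]
```

Reading page 0 or writing page 1 succeeds, while writing page 0, touching page 2, or naming page 3 each raises a fault.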

Figure 8.15 - Valid (v) or invalid (i) bit in page table

8.5.4 Shared Pages

- Paging systems can make it very easy to share blocks of memory, by simply duplicating frame numbers in multiple page tables. This may be done with either code or data.

- If code is reentrant, that means that it does not write to or change the code in any way ( it is non self-modifying ), and it is therefore safe to re-enter it. More importantly, it means the code can be shared by multiple processes, so long as each has their own copy of the data and registers, including the instruction register.

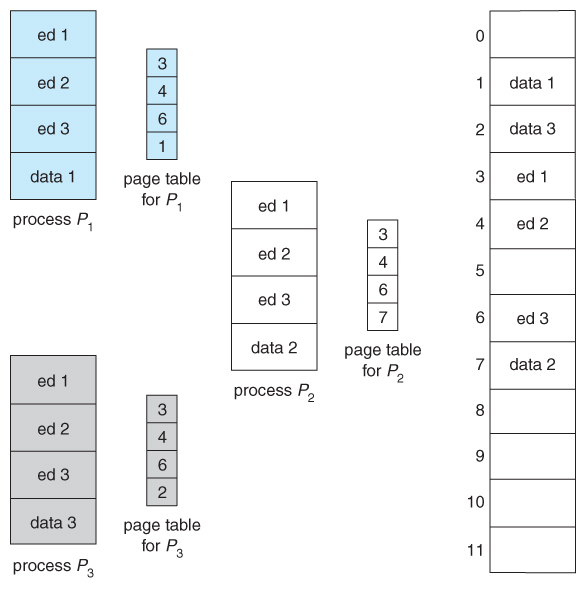

- In the example given below, three different users are running the editor simultaneously, but the code is only loaded into memory ( in the page frames ) one time.

- Some systems also implement shared memory in this fashion.

Figure 8.16 - Sharing of code in a paging environment

8.6 Structure of the Page Table

8.6.1 Hierarchical Paging

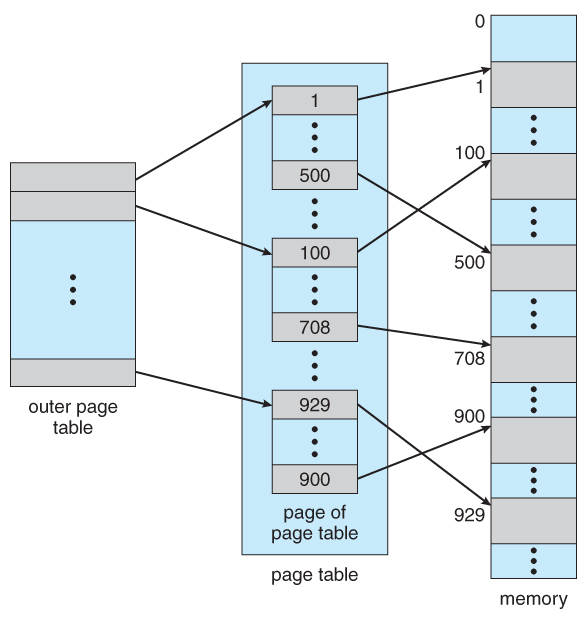

- Most modern computer systems support logical address spaces of 2^32 to 2^64.

- With a 2^32 address space and 4K ( 2^12 ) page sizes, this leaves 2^20 entries in the page table. At 4 bytes per entry, this amounts to a 4 MB page table, which is too large to reasonably keep in contiguous memory. ( And to swap in and out of memory with each process switch. ) Note that with 4K pages, this would take 1024 pages just to hold the page table!

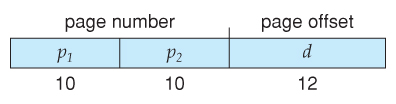

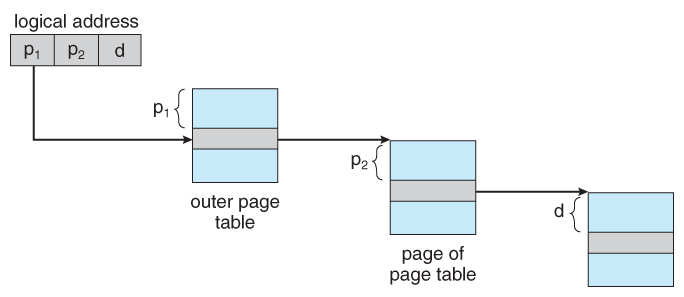

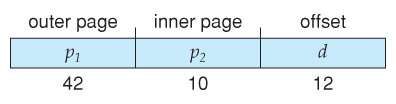

- One option is to use a two-tier paging system, i.e. to page the page table.

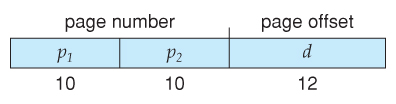

- For example, the 20 bits described above could be broken down into two 10-bit page numbers. The first identifies an entry in the outer page table, which identifies where in memory to find one page of an inner page table. The second 10 bits finds a specific entry in that inner page table, which in turn identifies a particular frame in physical memory. ( The remaining 12 bits of the 32 bit logical address are the offset within the 4K frame. )
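The 10 / 10 / 12 split can be expressed with shifts and masks. This is a sketch under the stated layout only: the function names are made up, and nested dictionaries stand in for the outer and inner page tables.

```python
def split_address(logical: int):
    """Split a 32-bit logical address into (outer, inner, offset)."""
    outer = (logical >> 22) & 0x3FF    # top 10 bits
    inner = (logical >> 12) & 0x3FF    # next 10 bits
    offset = logical & 0xFFF           # low 12 bits
    return outer, inner, offset


def walk(outer_table, logical: int) -> int:
    """Two-level lookup: outer index -> inner table -> frame number."""
    outer, inner, offset = split_address(logical)
    frame = outer_table[outer][inner]
    return (frame << 12) | offset


# Hypothetical tables: outer entry 1 points at an inner table
# whose entry 3 maps to frame 0x2A.
tables = {1: {3: 0x2A}}
physical = walk(tables, 0x00403004)
```

The address 0x00403004 splits into outer index 1, inner index 3, and offset 4, so the lookup lands at offset 4 within frame 0x2A, i.e. physical address 0x2A004.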

Figure 8.17 A two-level page-table scheme

Figure 8.18 - Address translation for a two-level 32-bit paging architecture

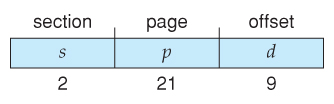

- VAX Architecture divides 32-bit addresses into 4 equal sized sections, and each page is 512 bytes, yielding an address form of:

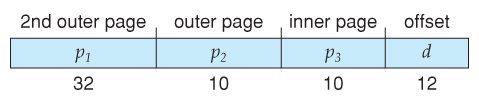

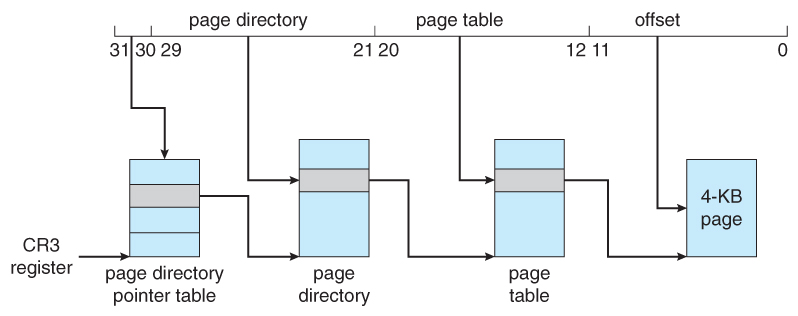

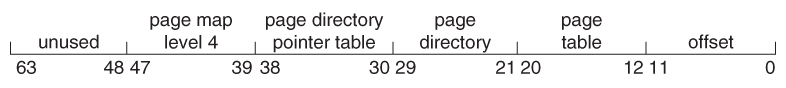

- With a 64-bit logical address space and 4K pages, there are 52 bits worth of page numbers, which is still too many even for two-level paging. One could increase the paging level, but with 10-bit page tables it would take 7 levels of indirection, which would make memory access prohibitively slow. So some other approach must be used.

64-bits 2-tiered leaves 42 bits in outer table

Going to a fourth level still leaves 32 bits in the outer table.

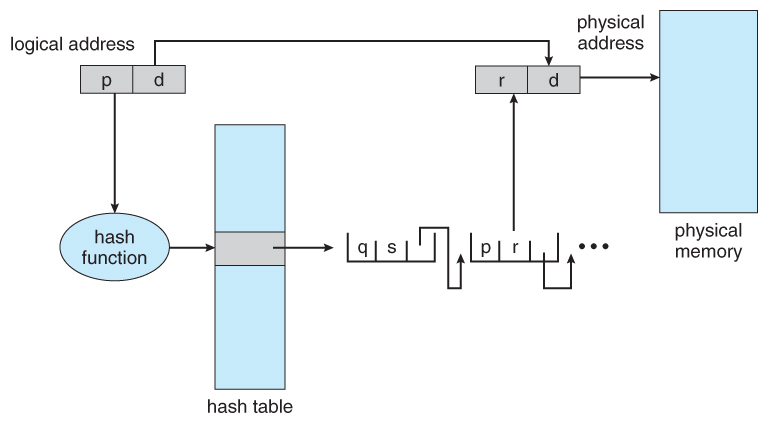

8.6.2 Hashed Page Tables

- One common data structure for accessing data that is sparsely distributed over a broad range of possible values is the hash table. Figure 8.19 below illustrates a hashed page table using chain-and-bucket hashing:
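As a rough illustration of chain-and-bucket hashing, the sketch below keeps a list of ( page, frame ) pairs in each bucket. The class name `HashedPageTable`, the modulo hash function, and the bucket count are assumptions made for this example only.

```python
class HashedPageTable:
    """Toy hashed page table: each bucket holds a chain of (page, frame) pairs."""

    def __init__(self, num_buckets=16):
        self.buckets = [[] for _ in range(num_buckets)]

    def _chain(self, page):
        # Assumed hash function: page number modulo the number of buckets.
        return self.buckets[page % len(self.buckets)]

    def insert(self, page, frame):
        chain = self._chain(page)
        for i, (existing_page, _) in enumerate(chain):
            if existing_page == page:
                chain[i] = (page, frame)  # remap a page that is already present
                return
        chain.append((page, frame))

    def lookup(self, page):
        # Walk the chain, comparing page numbers until a match is found.
        for existing_page, frame in self._chain(page):
            if existing_page == page:
                return frame
        return None  # not mapped


table = HashedPageTable(num_buckets=4)
table.insert(5, 100)
table.insert(9, 200)    # 5 and 9 collide in bucket 1 and share a chain
print(table.lookup(9))  # 200
```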

Figure 8.19 - Hashed page table

8.6.3 Inverted Page Tables

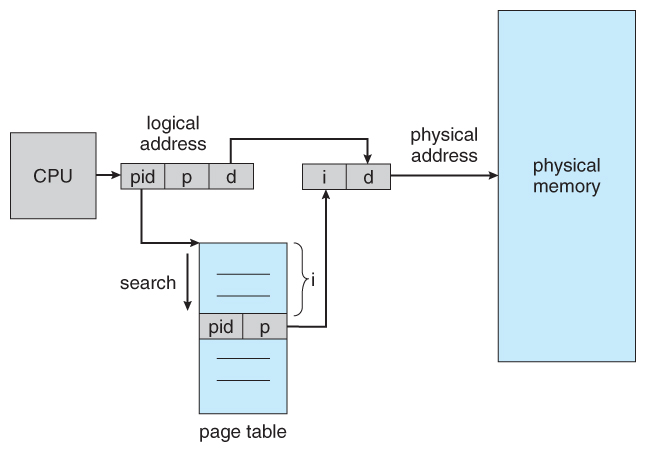

- Another approach is to use an inverted page table. Instead of a table listing all of the pages for a particular process, an inverted page table lists all of the pages currently loaded in memory, for all processes. ( I.e. there is one entry per frame instead of one entry per page. )

- Access to an inverted page table can be slow, as it may be necessary to search the entire table in order to find the desired page ( or to discover that it is not there. ) Hashing the table can help speed up the search process.

- Inverted page tables prohibit the normal method of implementing shared memory, which is to map multiple logical pages to a common physical frame. ( Because each frame is now mapped to one and only one process. )

Figure 8.20 - Inverted page table
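The following sketch models an inverted page table as a list indexed by frame number, with each entry holding a ( pid, page ) pair. The function names and sample contents are hypothetical. It shows both the slow linear search and a hash index built over the same table.

```python
def find_frame(inverted_table, pid, page):
    """Linear search: scan every frame entry for a matching (pid, page)."""
    for frame, entry in enumerate(inverted_table):
        if entry == (pid, page):
            return frame
    return None  # the page is not currently in memory


def build_hash_index(inverted_table):
    """Hash the table so a lookup no longer has to scan every frame."""
    return {entry: frame
            for frame, entry in enumerate(inverted_table)
            if entry is not None}


# One entry per physical frame; None marks a free frame.
inverted_table = [(1, 0), (2, 0), None, (1, 3)]

print(find_frame(inverted_table, 1, 3))  # 3
index = build_hash_index(inverted_table)
print(index.get((2, 0)))                 # 1
```

Because each frame slot records exactly one ( pid, page ) owner, there is no natural place to record a second process mapping the same frame, which is the shared-memory limitation noted above.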

8.6.4 Oracle SPARC Solaris ( Optional, New Section in 9th Edition )

8.7 Example: Intel 32 and 64-bit Architectures ( Optional )

8.7.1.1 IA-32 Segmentation

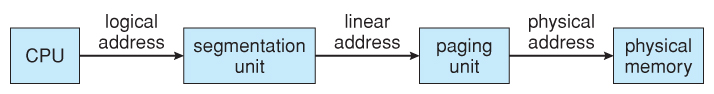

- The Pentium CPU provides both pure segmentation and segmentation with paging. In the latter case, the CPU generates a logical address ( segment-offset pair ), which the segmentation unit converts into a logical linear address, which in turn is mapped to a physical frame by the paging unit, as shown in Figure 8.21:

Figure 8.21 - Logical to physical address translation in IA-32

8.7.1.1 IA-32 Segmentation

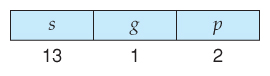

- The Pentium architecture allows segments to be as large as 4 GB ( 32 bits of offset ).

- Processes can have as many as 16K segments, divided into two 8K groups:

- 8K private to that particular process, stored in the Local Descriptor Table, LDT.

- 8K shared among all processes, stored in the Global Descriptor Table, GDT.

- Logical addresses are ( selector, offset ) pairs, where the selector is made up of 16 bits:

- A 13 bit segment number ( up to 8K )

- A 1 bit flag for LDT vs. GDT.

- 2 bits for protection codes.

- The descriptor tables contain 8-byte descriptions of each segment, including base and limit registers.

- Logical linear addresses are generated by looking the selector up in the descriptor table and adding the appropriate base address to the offset, as shown in Figure 8.22:
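The selector decoding and base-plus-offset step can be sketched as follows. The bit positions follow the x86 convention ( segment number in the upper 13 bits, then the LDT/GDT flag, then the 2 protection bits ); the descriptor tables are simplified here to dictionaries of ( base, limit ) pairs, and the function names and sample values are made up for illustration.

```python
def decode_selector(selector):
    """Split a 16-bit selector into (segment number, uses LDT?, protection bits)."""
    index = (selector >> 3) & 0x1FFF   # 13-bit segment number
    use_ldt = bool((selector >> 2) & 1)  # 1-bit table flag: 0 = GDT, 1 = LDT
    protection = selector & 0b11       # 2 protection bits
    return index, use_ldt, protection


def linear_address(selector, offset, gdt, ldt):
    """Look the selector up in the right table and add the base to the offset."""
    index, use_ldt, _ = decode_selector(selector)
    base, limit = (ldt if use_ldt else gdt)[index]
    if offset > limit:
        raise ValueError("offset beyond segment limit")
    return base + offset


# Hypothetical descriptor tables: segment number -> (base, limit).
gdt = {1: (0x00000000, 0xFFFFF)}
ldt = {2: (0x00010000, 0x00FFF)}

selector = (2 << 3) | (1 << 2) | 3  # segment 2, LDT, protection value 3
print(decode_selector(selector))                        # (2, True, 3)
print(hex(linear_address(selector, 0x20, gdt, ldt)))    # 0x10020
```

The limit check is what makes the descriptor's base and limit values act like per-segment base and limit registers.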

Figure 8.22 - IA-32 segmentation

8.7.1.2 IA-32 Paging

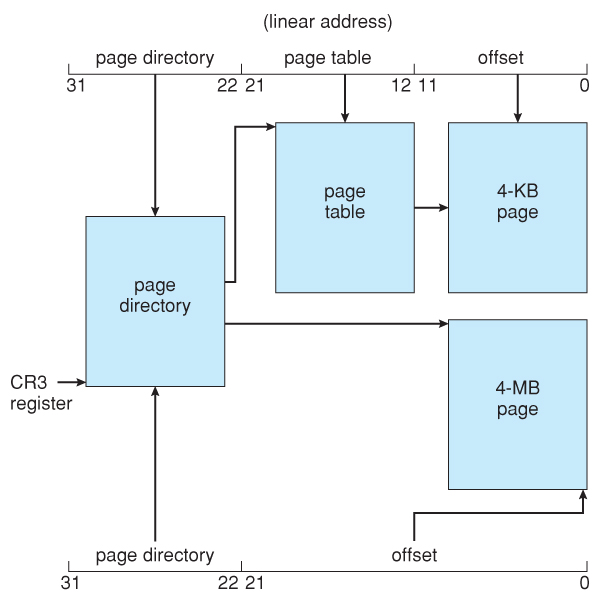

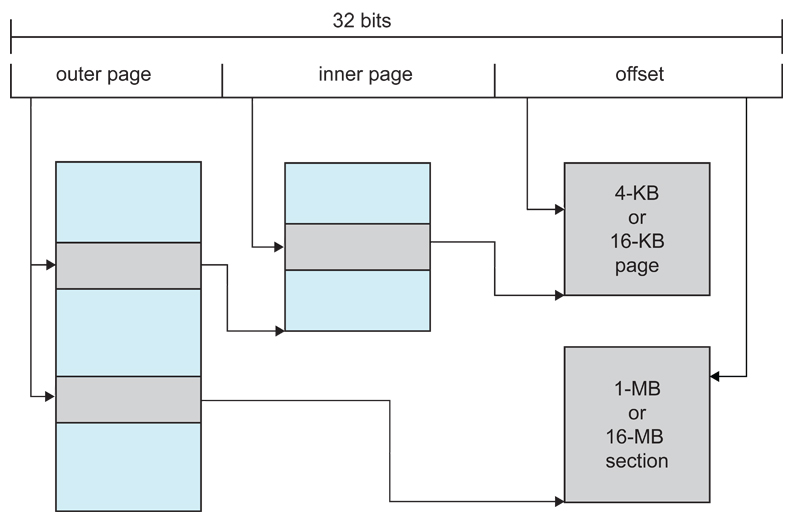

- Pentium paging normally uses a two-tier paging scheme, with the first 10 bits being a page number for an outer page table ( a.k.a. page directory ), and the next 10 bits being a page number within one of the 1024 inner page tables, leaving the remaining 12 bits as an offset into a 4K page.

- A special bit in the page directory can indicate that this page is a 4MB page, in which case the remaining 22 bits are all used as offset and the inner tier of page tables is not used.

- The CR3 register points to the page directory for the current process, as shown in Figure 8.23 below.

- If the inner page table is currently swapped out to disk, then the page directory will have an "invalid bit" set, and the remaining 31 bits provide information on where to find the swapped out page table on the disk.

Figure 8.23 - Paging in the IA-32 architecture.
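A simplified model of the two cases ( 4K pages through an inner page table, or a 4MB page mapped directly by the page directory ) might look like the sketch below. Directory entries are modeled as dictionaries with a hypothetical `large` flag; real entries pack this information into bits of a 32-bit word, and the invalid-bit case is not modeled.

```python
def translate_ia32(linear, page_directory):
    """Translate a 32-bit linear address using a toy page directory."""
    dir_index = linear >> 22           # first 10 bits: page directory index
    entry = page_directory[dir_index]
    if entry["large"]:
        # 4MB page: the remaining 22 bits are all offset, no inner table.
        return entry["base"] | (linear & 0x3FFFFF)
    table_index = (linear >> 12) & 0x3FF  # next 10 bits: inner page table index
    frame = entry["table"][table_index]
    return (frame << 12) | (linear & 0xFFF)  # last 12 bits: offset in a 4K page


# Hypothetical directory: entry 0 uses an inner table, entry 1 is a 4MB page.
page_directory = {
    0: {"large": False, "table": {1: 0x80}},
    1: {"large": True, "base": 0x00C00000},  # base is 4MB aligned
}

print(hex(translate_ia32(0x00001234, page_directory)))  # 0x80234
print(hex(translate_ia32(0x00412345, page_directory)))  # 0xc12345
```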

Figure 8.24 - Page address extensions.

8.7.2 x86-64

Figure 8.25 - x86-64 linear address.

8.8 Example: ARM Architecture ( Optional )

Figure 8.26 - Logical address translation in ARM.

Old 8.7.3 Linux on Pentium Systems - Omitted from the 9th Edition

- Because Linux is designed for a wide variety of platforms, some of which offer only limited support for segmentation, Linux supports minimal segmentation. Specifically Linux uses only 6 segments:

- Kernel code.

- Kernel data.

- User code.

- User data.

- A task-state segment, TSS

- A default LDT segment

- All processes share the same user code and data segments, because all processes share the same logical address space and all segment descriptors are stored in the Global Descriptor Table. ( The LDT is generally not used. )

- Each process has its own TSS, whose descriptor is stored in the GDT. The TSS stores the hardware state of a process during context switches.

- The default LDT is shared by all processes and generally not used, but if a process needs to create its own LDT, it may do so, and use that instead of the default.

- The Pentium architecture provides 2 bits ( 4 values ) for protection in a segment selector, but Linux only uses 2 values: user mode and kernel mode.

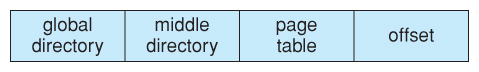

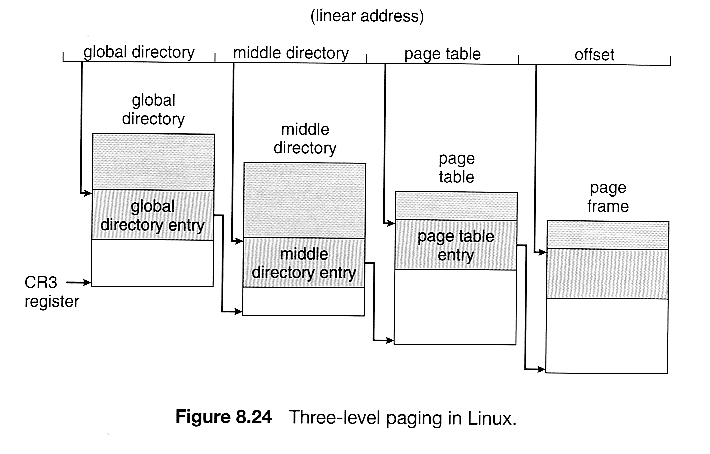

- Because Linux is designed to run on 64-bit as well as 32-bit architectures, it employs a three-level paging strategy as shown in Figure 8.24, where the number of bits in each portion of the address varies by architecture. In the case of the Pentium architecture, the size of the middle directory portion is set to 0 bits, effectively bypassing the middle directory.

8.8 Summary
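One way to picture a paging scheme whose field widths vary by architecture is a splitter that takes the widths as a parameter. The helper `split_linear` below is hypothetical; the 10/0/10/12 widths model the Pentium case described above, with a 0-bit middle directory.

```python
def split_linear(address, widths):
    """Split an address into fields; widths are listed most significant first."""
    fields = []
    shift = sum(widths)
    for width in widths:
        shift -= width
        # A 0-bit field has an empty mask, so it always yields index 0.
        fields.append((address >> shift) & ((1 << width) - 1))
    return fields


# Field order: global directory, middle directory, page table, offset.
PENTIUM_WIDTHS = (10, 0, 10, 12)

print(split_linear(0x00402345, PENTIUM_WIDTHS))  # [1, 0, 2, 837]
```

With a 0-bit middle directory, the middle index is always 0, so the same three-level walk collapses to the two-level scheme the hardware actually uses.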


( For a fun and easy explanation of paging, you may want to read about The Paging Game. )


Source: https://www.cs.uic.edu/~jbell/CourseNotes/OperatingSystems/8_MainMemory.html

Posted by: salothisheatch59.blogspot.com
